This week’s episode of "The AI Artifacts Podcast" kicks off with fresh news that Sam Altman was ousted as CEO of OpenAI on Nov. 17. Brian and Sarah dig into the known details and review other stories, including Ed Newton-Rex leaving Stability AI and Sundar Pichai’s comparison of AI to climate change.

Then, the podcast welcomes Jade Newton, an AI data expert who has worked at large-scale companies in a variety of contexts, to discuss the future of AI regulation. Get a deep look at standards for responsible AI use, as well as a very special dog-themed edition of "Two truths and l’AI."

Timestamps for this episode:

[0:00] Intro

[0:27] Breaking news from Friday on Sam Altman being pushed out at OpenAI

[5:07] Altman confirmed plans for GPT-5, though training has not yet begun

[7:15] Ed Newton-Rex leaves Stability AI, criticizing fair use approaches toward training data

[12:22] Sundar Pichai compares AI to climate change in the context of regulation

[17:44] "Two truths and l’AI: Dog edition"

[25:36] Interview with Jade Newton

[28:45] What does "responsible AI" mean?

[32:33] How to solve issues of access

[36:26] Best and worst ideas for regulatory action

[45:43] How encryption and data privacy concerns relate to AI

[48:14] Risk of regulatory capture by incumbents

[51:39] Regulation in the European Union

[55:20] Best practices for companies implementing AI

Links for topics referenced in this episode:

OpenAI’s board fires Sam Altman as CEO (The Verge): https://www.theverge.com/2023/11/17/23965982/openai-ceo-sam-altman-fired

Official OpenAI announcement about Altman leaving (OpenAI): https://openai.com/blog/openai-announces-leadership-transition

Podcast episode from 2019 where Brian and Sarah interviewed Mira Murati, who is now OpenAI’s interim CEO (Apple Podcasts):

Altman confirms plans for GPT-5 at OpenAI (Decrypt): https://decrypt.co/206044/gpt-5-openai-development-roadmap-gpt-4

Ed Newton-Rex quits at Stability AI (BBC): https://www.bbc.com/news/technology-67446000

Sundar Pichai makes AI and climate change comparison (CNBC): https://www.cnbc.com/2023/11/16/google-ceo-sundar-pichai-compares-ai-to-climate-change-at-apec-ceo-summit.html

Microsoft makes Bing Image Creator changes after Disney copyright complaints (Ars Technica): https://arstechnica.com/ai/2023/11/fake-movie-posters-with-disney-logos-force-microsoft-to-alter-bing-image-creator/

Meta’s AI tools enable dog additions to any photo (Mashable): https://mashable.com/article/meta-ai-tools-emu

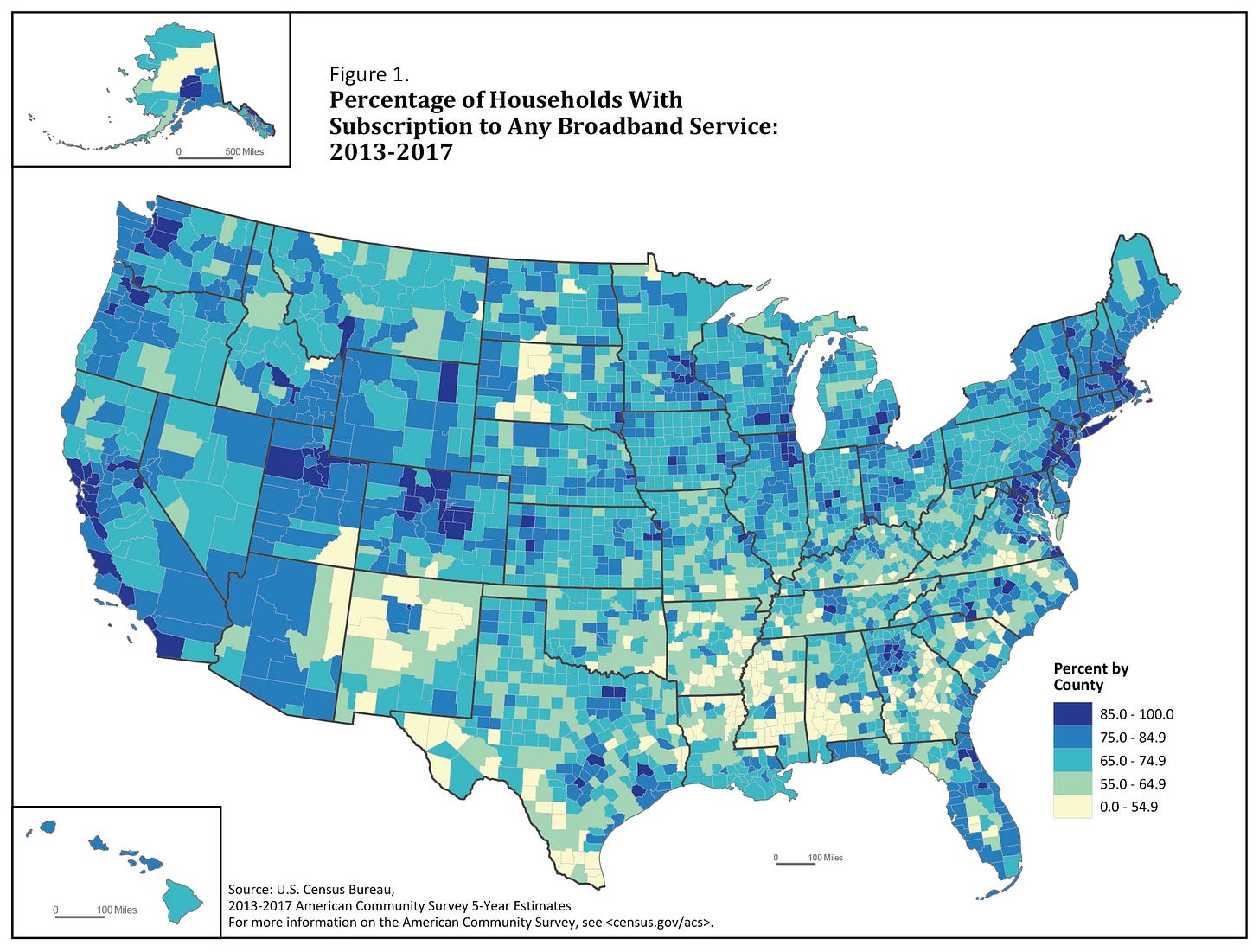

U.S. Census data on broadband internet access in 2018 (Census.gov): https://www.census.gov/library/stories/2018/12/rural-and-lower-income-counties-lag-nation-internet-subscription.html

Summary of the individual parts of Biden’s executive action on AI (The Markup): https://themarkup.org/news/2023/11/01/the-problems-bidens-ai-order-must-address

Additional note from Jade on the White House’s executive action:

I think that people need to understand that the White House's executive order on AI is a good first step to putting guardrails in place when it comes to developing, testing, training, and optimizing emerging technologies that use machine learning.

However, I also think that this executive order is very broad and there is this thinking that AI can solve systemic issues (think discrimination/bias/access, etc.). AI is not going to fix systemic racism, sexism, xenophobia, education funding, etc. These are issues that are rooted in the fabric of what America was designed to be. AI cannot change people's mindsets. And despite the daily advances of technology, there will always be a need for a human-in-the-loop. AI is a tool -- it is not the ultimate problem solver. Humans can use AI to solve some problems, but ultimately AI can't do it on its own. I think it is important for those at the table (i.e., NIST and whoever else the White House appoints to the committee(s) ensuring implementation of these guardrails) to understand the above. AI can only do what humans tell it to do. And the systemic issues that are rampant in American culture are too complex to be solved by technology.

Jade Newton on LinkedIn: https://www.linkedin.com/in/j3n3r3/

More links to news that didn’t make the show:

Discord gets rid of its AI assistant Clyde (The Verge): https://www.theverge.com/2023/11/17/23965185/discord-is-shutting-down-its-ai-chatbot-clyde

Microsoft Ignite announcements related to AI (The Verge): https://www.theverge.com/23961007/microsoft-ignite-2023-news-ai-announcements-copilot-windows-azure-office

Reactions to the Humane AI pin (Axios): https://www.axios.com/2023/11/14/humane-ai-pin-smartphone-replace

Music used in this podcast comes from "Vanishing Horizon" by Jason Shaw and is licensed under a Creative Commons Attribution 3.0 United States License.